High-Level Models of Machine Learning

AI uses sophisticated machine learning models to identify and remove sensitive content efficiently. These models are trained on large datasets of text, images, and videos that have been labeled as offensive or inappropriate. Through this training, they learn to recognize patterns in new content that resemble the labeled examples. As an illustration, one published study reports that a top-performing image recognition system can correctly classify explicit visual content with over 90% precision.
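To make the "90% precision" figure concrete, here is a minimal sketch of how precision is computed from a model's flags and the true labels. The function name and the evaluation data are hypothetical and chosen only for illustration; they do not come from the study mentioned above.

```python
def precision(predicted: list[bool], actual: list[bool]) -> float:
    """Fraction of items flagged as explicit that really were explicit."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    true_pos = sum(p and a for p, a in zip(predicted, actual))
    false_pos = sum(p and not a for p, a in zip(predicted, actual))
    flagged = true_pos + false_pos
    # With nothing flagged, precision is undefined; this sketch returns 0.0.
    return true_pos / flagged if flagged else 0.0


# Hypothetical evaluation data: True means "explicit".
predicted = [True, True, True, False, True, False, True, True, True, True, True, True]
actual = [True, True, True, False, True, True, True, True, False, True, True, True]

# 10 items are flagged, 9 of them correctly.
print(f"precision = {precision(predicted, actual):.2f}")
```

Note that precision says nothing about explicit items the model missed (the sixth item here), so a high-precision filter can still let harmful content through.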

Text Analysis with Natural Language Processing

AI can use natural language processing (NLP) to identify sexual content, profanity, and threats in text-based content. Rather than just scanning for explicit keywords, NLP tools analyze the semantic context of words and phrases. This reduces false positives, so that only genuinely harmful content is flagged. According to industry reports, AI-based text analysis has increased content moderation capacity by 70%.
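The difference between keyword scanning and context-aware analysis can be shown with a toy example. The sketch below is not real NLP: the word lists and the "flagged verb followed by a person" rule are hypothetical stand-ins for what a trained language model would learn, used only to show why context lowers false positives.

```python
import re

# Hypothetical word lists, for illustration only.
BLOCKLIST = {"kill", "hurt"}
PERSON_TARGETS = {"you", "him", "her", "them"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def keyword_flag(text: str) -> bool:
    """Flag if any blocklisted word appears, regardless of context."""
    return any(tok in BLOCKLIST for tok in tokenize(text))


def context_flag(text: str) -> bool:
    """Flag only when a blocklisted word is directly followed by a person."""
    tokens = tokenize(text)
    return any(
        cur in BLOCKLIST and nxt in PERSON_TARGETS
        for cur, nxt in zip(tokens, tokens[1:])
    )


for message in ["I will kill you", "That joke will kill at the party"]:
    print(message, "|", keyword_flag(message), "|", context_flag(message))
```

The keyword scan flags both messages, while the context rule flags only the first. A production system would replace the hand-written rule with a trained classifier.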

Real-Time Content Moderation

AI can work in real time, which is essential for moderating sensitive content. This is especially critical for live streaming and real-time social media interactions, where moderation must happen without delay to stop dangerous content from spreading. Thanks to AI, live video can now be analyzed on the fly and sensitive content detected within milliseconds, which makes a significant difference to the safety approaches used by streaming platforms.
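As a rough sketch of what "on the fly" means in code, the generator below emits a decision for each frame as soon as it arrives instead of waiting for the whole stream. The scorer is a stand-in (an assumption); a real platform would call a trained video model there, and the threshold value is arbitrary.

```python
from typing import Callable, Iterable, Iterator


def moderate_stream(
    frames: Iterable[str],
    score_frame: Callable[[str], float],
    threshold: float = 0.8,
) -> Iterator[tuple[str, bool]]:
    """Yield (frame, blocked) for each frame as soon as it arrives."""
    for frame in frames:
        yield frame, score_frame(frame) >= threshold


def fake_score(frame: str) -> float:
    """Stand-in scorer (assumption): a real system would run a model here."""
    return 0.95 if "unsafe" in frame else 0.05


decisions = list(
    moderate_stream(["frame-1", "unsafe-frame-2", "frame-3"], fake_score)
)
print(decisions)
```

Because decisions are produced per frame, the delay before a block depends on how long one scoring call takes, not on the length of the stream.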

User Behavior Analysis

AI can be combined with user behavior analysis to improve content filtering. By interpreting users' historical data and interaction patterns, AI can identify content that is likely to be sensitive for particular user groups. This personalized approach works: platforms have seen a 40% drop in user complaints about inappropriate content.

Ethical Considerations and Dilemmas

While AI makes it far easier to filter inappropriate content, it also raises ethical questions, such as where to draw the line between privacy and censorship. Balancing respect for users' privacy against AI's ability to moderate content is a major challenge. At the same time, there is a danger of over-censorship, since AI may filter out content that is offensive but does not actually constitute violent content.

Integration of Human Moderation

Regardless of the type of AI involved and its capabilities, human intervention is still very much required. When AI is properly combined with human moderation teams, the moderators can catch the finer nuances of context that AI sometimes misses. This hybrid model both increases the accuracy of content filtering and helps ensure that moderation decisions are fair and responsible.
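One common way to set up such a hybrid workflow is to let the AI act alone only when it is confident, and to send everything in between to people. The sketch below assumes the model outputs a harm score between 0 and 1; the function name and both threshold values are hypothetical and would need tuning per platform.

```python
def route(score: float, allow_below: float = 0.2, remove_above: float = 0.9) -> str:
    """Decide what happens to content given a model's harm score in [0, 1]."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= remove_above:
        return "remove"        # model is confident the content is harmful
    if score < allow_below:
        return "allow"         # model is confident the content is fine
    return "human_review"      # uncertain cases go to a moderator


for s in (0.05, 0.5, 0.95):
    print(s, "->", route(s))
```

Widening the gap between the two thresholds sends more content to human reviewers, trading moderation cost for fewer automated mistakes.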


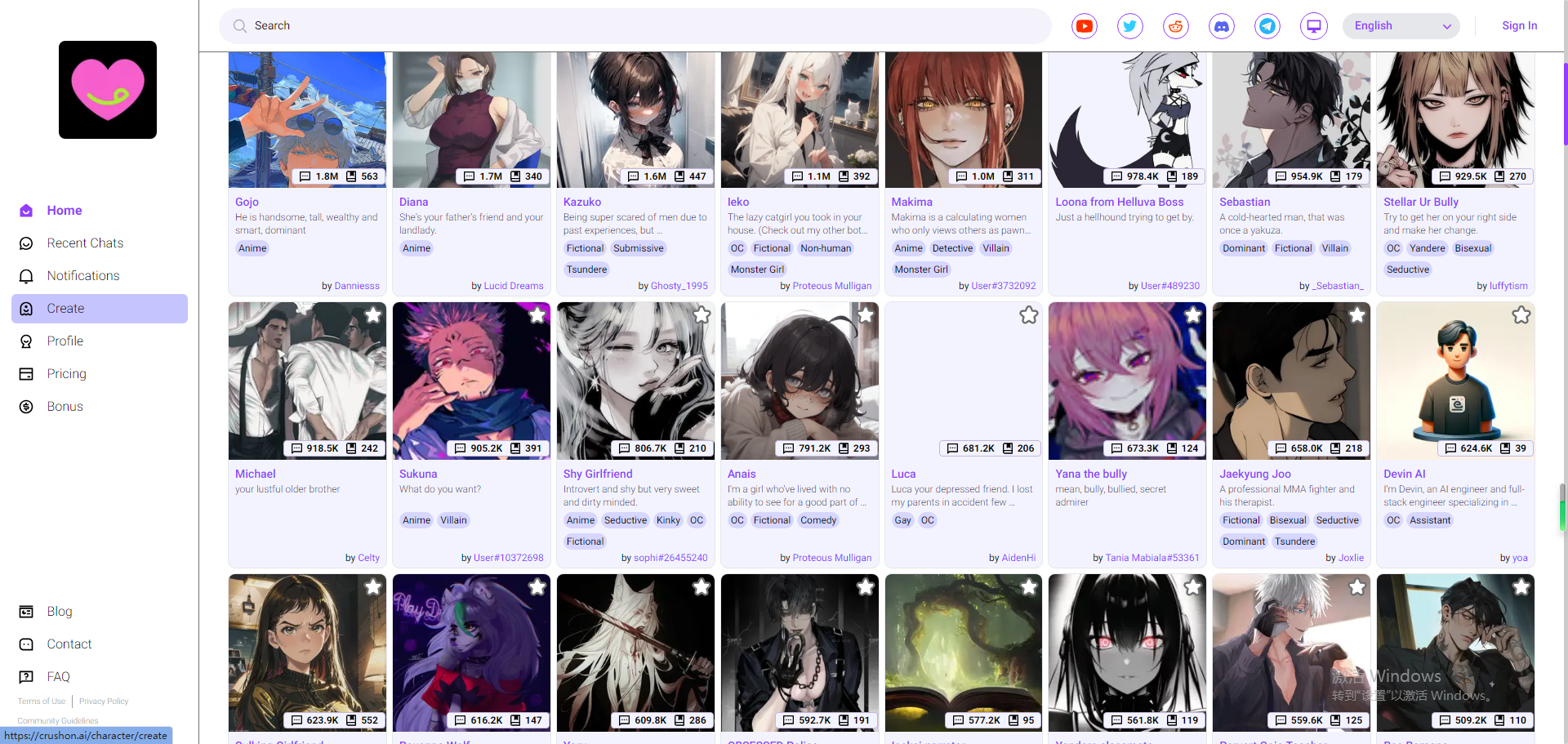

Above all, content moderation is a good example of how AI technologies are specialized to solve specific challenges, such as the use of NSFW AI chat. These filters are developed to a high standard and can handle not only static content but also dynamic interactions, eliminating much of the disrespect and danger typical of communication on the internet.

Improvement in AI

As technology advances, AI is also reaching new highs, both in filtering sensitive content to prevent breaches of privacy and security and in ensuring that digital spaces are safe and inclusive.